Posted on 2020-09-28 by Matt Strahan in Industry

There’s a fundamental issue with penetration testing that people don’t really talk about very much. It’s not a fun issue to talk about, because it leads to what effectively becomes corruption in the industry, which then leads to the vulnerabilities that are missed being used to cause huge damage to businesses, everyday people, and society.

The issue is simple: there’s no good way to tell whether the penetration test you have had done has found all the vulnerabilities.

This is the first of a three-part blog post where I’ll be describing why it’s just so damn hard to validate penetration testing results. In the next post I’ll talk about side channels and ways to at least ensure you’re not getting ripped off, but also how an evil firm might present a good face. Finally, in the third post I’ll be talking about three pie-in-the-sky crazy ideas for reforming the industry.

Before I go on I should make it clear that I am in no way saying penetration testing is bad. I do think that there are penetration testers and penetration testing firms that are bad, but a good penetration test is crucial for finding those security vulnerabilities you’re concerned about and keeping you safe.

As long as it’s a good penetration test.

Once upon a time there were two penetration testers…

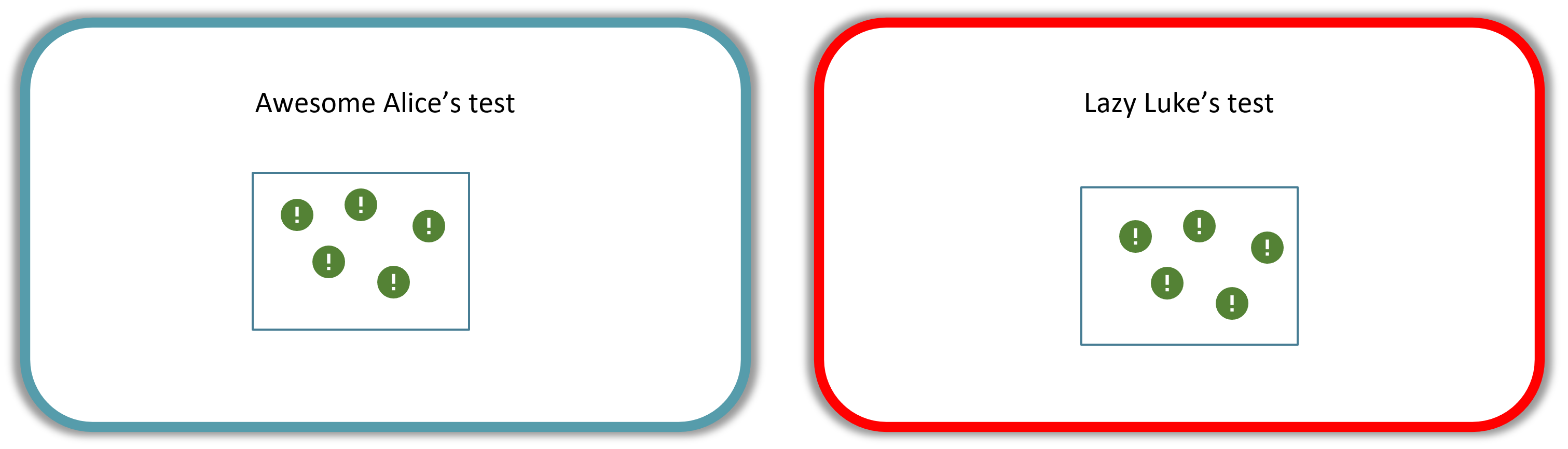

These two penetration testers are helpfully named Awesome Alice and Lazy Luke. They each have a system to test.

Awesome Alice is testing a relatively secure system that has only 5 vulnerabilities.

Lazy Luke is testing an extremely insecure system that has 25 vulnerabilities!

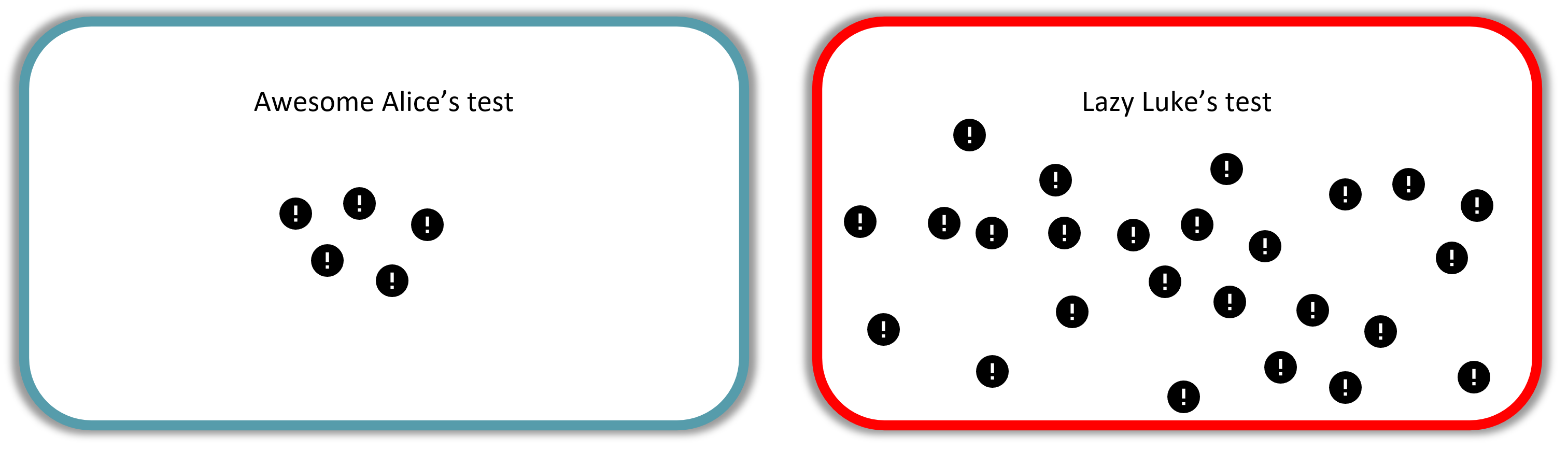

Awesome Alice is awesome, and finds all 5 vulnerabilities.

Lazy Luke also finds 5 vulnerabilities, but misses 20 vulnerabilities!

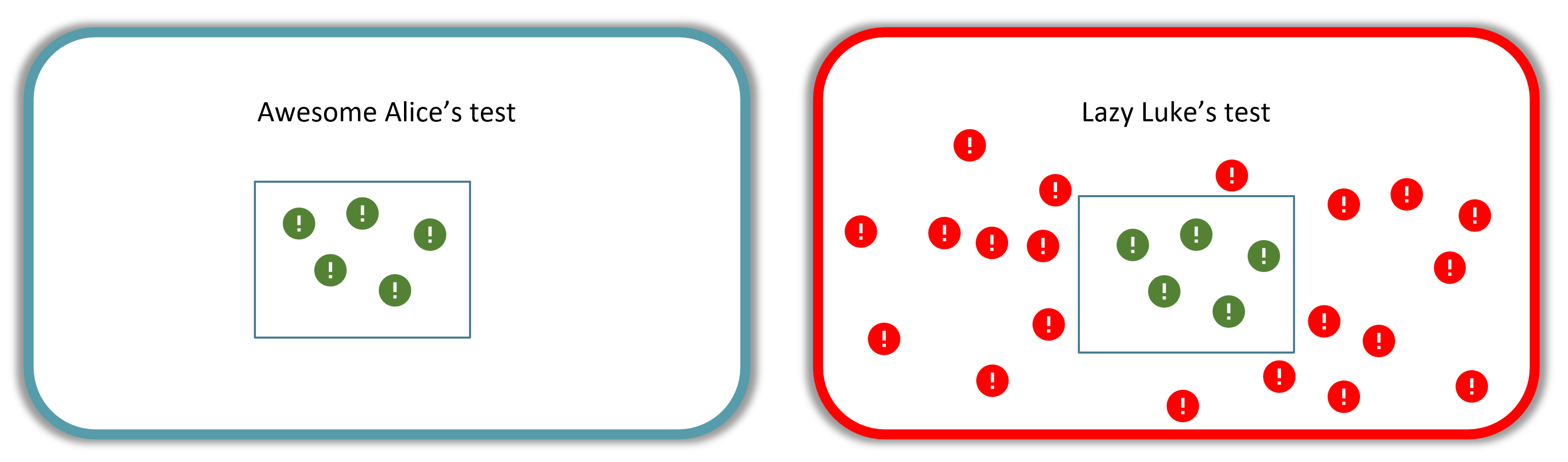

What does the client see? Well, the client gets Awesome Alice’s report, sees 5 vulnerabilities, and says “great, we have found these 5 vulnerabilities!” The client is happy with the report and says well done.

The client also gets a report from Lazy Luke. The client sees 5 vulnerabilities in this report and says “great, we have found these 5 vulnerabilities!” The client is happy with the report and says well done.

Since the client has two reports each with five vulnerabilities, the client thinks “what good penetration testers we have!”

The client doesn’t know, though, that Lazy Luke missed 20 vulnerabilities. The client of Lazy Luke then gets popped and ends up in the news, loses millions of dollars, and people go destitute because they lose their life’s savings.

Not a particularly happy ending

In that story the client saw two reports, each with 5 vulnerabilities. The client, though, does not know how many vulnerabilities in the system were missed. If they did, they wouldn’t have needed the penetration test in the first place.

Usually the penetration tester would know, deep down, if they did a good job or not. Economists would call this a “hidden action” information asymmetry problem. The tester and the firm will know whether a good job has been done or not, but the customer may not find out until it’s too late.
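To put numbers on the story, here is a minimal Python sketch of the two engagements. The `Engagement` class and the `client_view` function are hypothetical names made up for illustration; the only figures used are the ones from the Alice and Luke story above.

```python
from dataclasses import dataclass


@dataclass
class Engagement:
    tester: str
    actual_vulns: int  # ground truth, which the client never gets to see
    found_vulns: int   # what ends up in the report

    @property
    def missed(self) -> int:
        return self.actual_vulns - self.found_vulns

    @property
    def coverage(self) -> float:
        # Fraction of the real vulnerabilities that made it into the report
        return self.found_vulns / self.actual_vulns


def client_view(engagement: Engagement) -> int:
    # The client can only count the findings in the report
    return engagement.found_vulns


alice = Engagement("Awesome Alice", actual_vulns=5, found_vulns=5)
luke = Engagement("Lazy Luke", actual_vulns=25, found_vulns=5)

for e in (alice, luke):
    print(
        f"{e.tester}: client sees {client_view(e)} findings, "
        f"real coverage {e.coverage:.0%}, missed {e.missed}"
    )
```

The client-facing number is identical for both testers. Everything that separates them lives in `actual_vulns`, which is exactly the field nobody outside the testing firm can observe.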

What could we possibly do?

When first putting this together, I asked people in the industry how they might look to verify or validate results. I got three recurring answers back. I want to have a look at each of them and what their advantages and pitfalls are. Since I’m fond of seeing loopholes in things, I’d also like to look at it from the perspective of a less scrupulous penetration testing firm (let’s call them Evilfirm) that might look to get past these tests.

Multiple firms with the same scope

You can form a test of multiple firms where they’re given the same application or network to test. You get the results and see who “won”.

The obvious first drawback is the expense. Most organisations have a limited budget, and an internal manager would have a hard time getting more by saying “well, I want to run a test!”.

Even if you have the budget, is the test going to be any good? It’s definitely not a statistically valid test by any standards. Maybe one of the testers got particularly lucky or unlucky. Even if a tester does well in this test, there’s no guarantee you’ll get the same tester for future tests.
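As a rough illustration of how much luck can matter in a single head-to-head, the sketch below works out the chance that a weaker tester reports at least as many findings as a stronger one. Everything here is an assumption made for the sake of the example: the per-vulnerability hit rates, the number of vulnerabilities, and the idea that each vulnerability is found independently with a fixed probability. Real testing is messier than that.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    # Probability of exactly k finds out of n, each found with probability p
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def prob_weaker_ties_or_wins(n_vulns: int, p_strong: float, p_weak: float) -> float:
    """Chance the weaker tester reports at least as many findings as the
    stronger tester in one bake-off (hypothetical independence model)."""
    total = 0.0
    for strong_found in range(n_vulns + 1):
        for weak_found in range(strong_found, n_vulns + 1):
            total += binom_pmf(strong_found, n_vulns, p_strong) * binom_pmf(
                weak_found, n_vulns, p_weak
            )
    return total


# Assumed, made-up numbers: 10 vulnerabilities in scope, a strong tester who
# finds each one 80% of the time, and a weaker one who finds each 60% of the time.
chance = prob_weaker_ties_or_wins(n_vulns=10, p_strong=0.8, p_weak=0.6)
print(f"Weaker tester ties or wins with probability {chance:.2f}")
```

Under these assumptions the result is not zero, and it grows as the scope gets smaller or the skill gap narrows. One bake-off is a single sample, which is why it tells you so little.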

Pro tip from Evilfirm: Use our one good pentester for this test, farm the rest to grads.

Have a test scope with known vulnerabilities

Build a lab environment where you already know all the vulnerabilities. Any new company that wants your work has to get through the lab environment first. This is something that was in vogue a while ago for banks and larger companies but fell out of fashion.

Just thinking about this brings me flashbacks from when I took part in creating some CTFs. It’s hard. Really hard. Not just putting in the vulnerabilities, but putting them in in a controlled way is incredibly difficult. I really doubt many organisations would have the skills to do this internally and if they did they might not need outside pentesters.

More than that would be the time requirement. It’s not just creating the environment, but you’re also going to have to maintain it and create enough variety that people can’t cheat. You’d basically need to hire someone full time to make sure the environment is up-to-date.

Let’s take a step back though. Haven’t you now just made a certification that isn’t quite as good as the OSCP? I can guarantee you that they’ve spent more time on their environment than you have.

Pro tip from Evilfirm: Use our one good pentester for this test, farm the rest to grads.

Perform an audit on testing data

After the test, have the penetration testing firm provide all testing data, such as Burp logs, command line logs, scanning files, screenshots, network captures, and first-born children, then perform an audit to see whether they have done a good job or not.

You know, I’ve been doing this for a long time and I’m not sure I’d be able to do a proper audit. Most organisations wouldn’t have the internal skillset.

The biggest problem is the amount of testing data there can be. Some of the Burp logs can be gigabytes in size. Going through each individual packet would take a huge amount of time, not to mention the enormous expense. At the end of the audit you’d probably not even be sure whether something was missed or not. I’d guess the best you can do is make sure there’s activity. Does that show whether or not a good job was done? Probably not.

Pro tip from Evilfirm: Overload the customer with data. Do a wget -m on every web interface you see, bundle in every little piece of info you can find, and make sure you include the kitchen sink.

Where to from here then?

In the next post I’ll be looking at the “side channels” that we might be able to use to tell whether a tester is good or not. Evilfirm knows about these side channels though. Let’s throw on our black hat and see if we can improve our looks without having to spend money on improving quality.

About the author

Matthew Strahan is Co-Founder and Managing Director at Volkis. He has over a decade of dedicated cyber security experience, including penetration testing, governance, compliance, incident response, technical security and risk management. You can catch him on Twitter and LinkedIn.
